Four-dimensional videos

More info: mathiasnft.blogspot.com/

Video sequences shown with volumetric rendering. The video frames are layered along the z-axis with a time offset, giving the impression of a four-dimensional video, or of long-exposure photogrammetry. It feels like a video that is not confined to a 2D space, almost like a 3D representation of a four-dimensional object. You can "see" time: the trajectory of objects.

For this video I chose cars moving towards and away from the camera, because that motion runs in the same direction as the frames are offset in 3D space. Movement that does not follow the direction of the frames gets distorted. To make it, I isolated the car lights by driving the alpha channel from the luminance channel. With only the lights left, the result is closer to how long-exposure photography works.
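The luminance-to-alpha step can be sketched roughly as below. This is only an illustration in plain Python, not the code used for the video: the Rec. 709 luminance weights, the `threshold` parameter and the function names are all assumptions.

```python
def luminance(r, g, b):
    """Rec. 709 luminance for channel values in 0..255 (assumed weighting)."""
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def luminance_to_alpha(frame, threshold=0):
    """Return RGBA pixels whose alpha equals their luminance.

    frame is a list of rows, each row a list of (r, g, b) tuples.
    Pixels at or below the hypothetical `threshold` become fully transparent,
    so dark areas vanish and only bright lights stay visible.
    """
    out = []
    for row in frame:
        new_row = []
        for r, g, b in row:
            a = round(luminance(r, g, b))
            if a <= threshold:
                a = 0
            new_row.append((r, g, b, a))
        out.append(new_row)
    return out


# One row: a black pixel, a white pixel and a pure red pixel.
frame = [[(0, 0, 0), (255, 255, 255), (255, 0, 0)]]
rgba = luminance_to_alpha(frame)
```

Black ends up fully transparent and white fully opaque, which is why headlights and tail lights survive while the road and the sky drop away.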

Another version with a crosswalk. Same type of motion, just a different subject.

Process

This project was the result of a series of experiments related to the concept of time. The first few experiments were tied to "temporal distortion", which was basically a slitscan experiment connected to your cursor. Here you could manipulate real-time video with cursor strokes and get a peek into the recent past.
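The slitscan idea can be sketched like this: keep a short history of frames and let every column of the output look a different number of frames into the past. It is a minimal sketch in plain Python, not the original experiment; the `SlitScan` class and the `delays` list are hypothetical, and in the cursor version the delays would presumably be painted by the cursor strokes.

```python
from collections import deque


class SlitScan:
    """Keep a short history of frames and build each output column
    from a different moment in the past."""

    def __init__(self, history=4):
        # Oldest frames fall out automatically; the newest is at index -1.
        self.frames = deque(maxlen=history)

    def push(self, frame):
        self.frames.append(frame)

    def render(self, delays):
        """delays[x] is how many frames back column x should look."""
        newest = self.frames[-1]
        out = []
        for y, row in enumerate(newest):
            out_row = []
            for x in range(len(row)):
                # Clamp so we never look further back than the buffer holds.
                d = min(delays[x], len(self.frames) - 1)
                out_row.append(self.frames[-1 - d][y][x])
            out.append(out_row)
        return out


# Four tiny 2x3 frames; every pixel stores the index of its frame.
scan = SlitScan(history=4)
for t in range(4):
    scan.push([[t] * 3 for _ in range(2)])

image = scan.render([0, 1, 2])
```

Here the left column shows the present and each column to the right shows an older frame, so a single image holds several moments at once.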

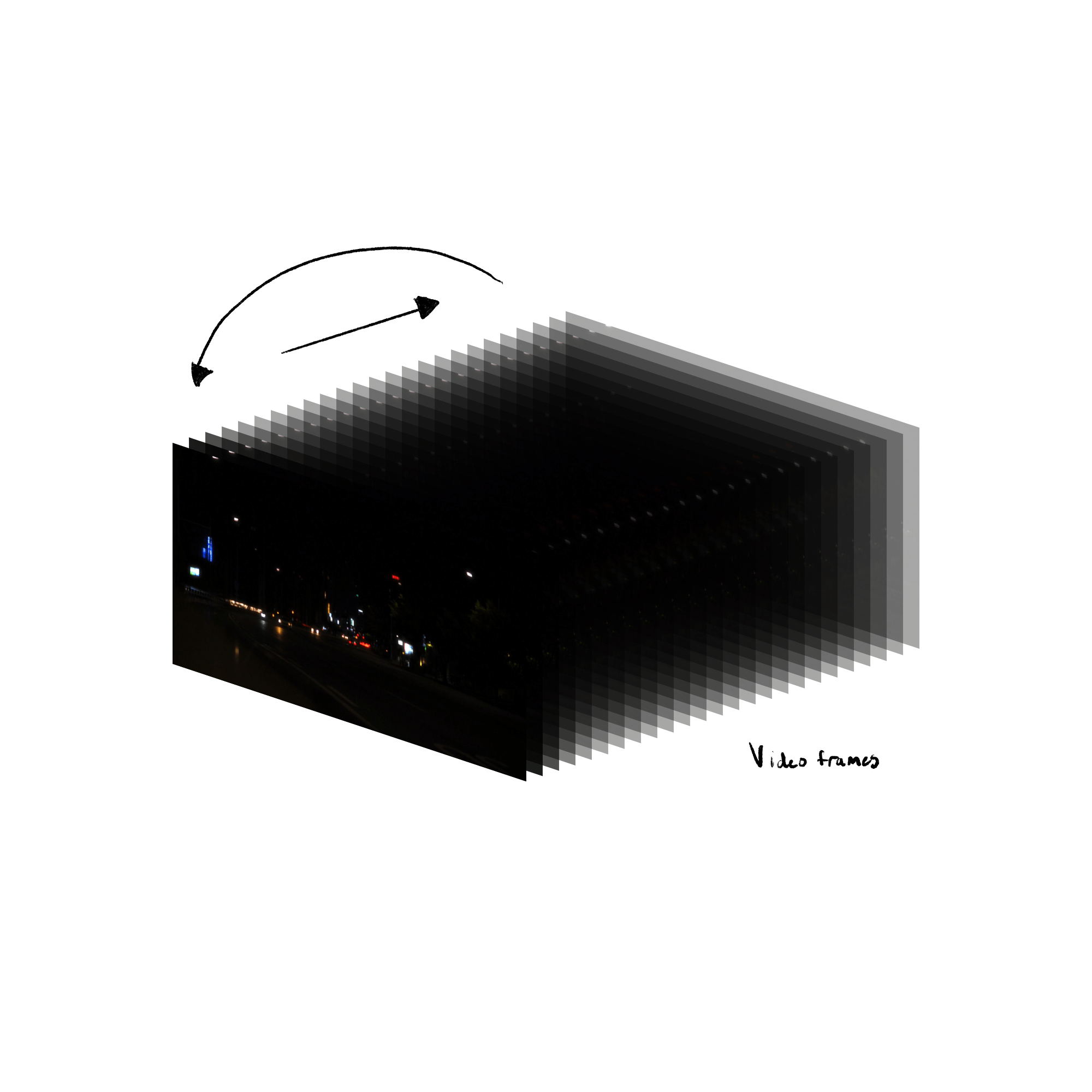

To develop this further, I tried to apply the slitscan concept in 3D, which is basically volume rendering. Volume rendering (or voxel rendering) is a way to render a stack of 2D "cross sections" as a 3D form. In the final experiment, a video sequence is used instead of a dataset of slices. This was made using the ofxVolumetrics addon for openFrameworks.
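As a rough sketch of using video frames as the slices of a volume, the snippet below stacks frames along z so that depth equals time. It is plain Python and does not show the ofxVolumetrics API; `build_volume`, `trajectory` and the parameters `start`, `depth` and `step` are made up for illustration.

```python
def build_volume(frames, start=0, depth=4, step=1):
    """Stack up to `depth` frames along z, beginning at frame `start`.

    Returns volume[z][y][x]. Slice z holds frame start + z * step, so moving
    along z is the same as moving forward in time.
    """
    volume = []
    for z in range(depth):
        t = start + z * step
        if t >= len(frames):
            break  # ran out of footage; return a shallower volume
        volume.append(frames[t])
    return volume


def trajectory(volume, y, x):
    """Read one pixel position through all slices: its history over time."""
    return [slice_[y][x] for slice_ in volume]


# Six tiny 2x2 frames; each pixel stores frame_index * 10 + x.
frames = [[[t * 10 + x for x in range(2)] for _ in range(2)] for t in range(6)]
volume = build_volume(frames, start=1, depth=3, step=2)
path = trajectory(volume, 0, 1)
```

Advancing `start` by one for every rendered image would make the volume slide through the footage, while `step` controls how much time separates neighbouring slices.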

Below you can see how the video frames are placed in 3D space.
